I once overheard an elevator conversation between two managers. One of them was talking about a direct report:

“He’s not ready to be promoted. Every time I ask him a question, I get this long-winded answer that’s loaded with unnecessary details… ”

This manager was saying out loud what a lot of managers and executives are thinking (but rarely give as direct feedback). Too much detail doesn’t make you seem more capable — on the contrary, it makes you look junior.

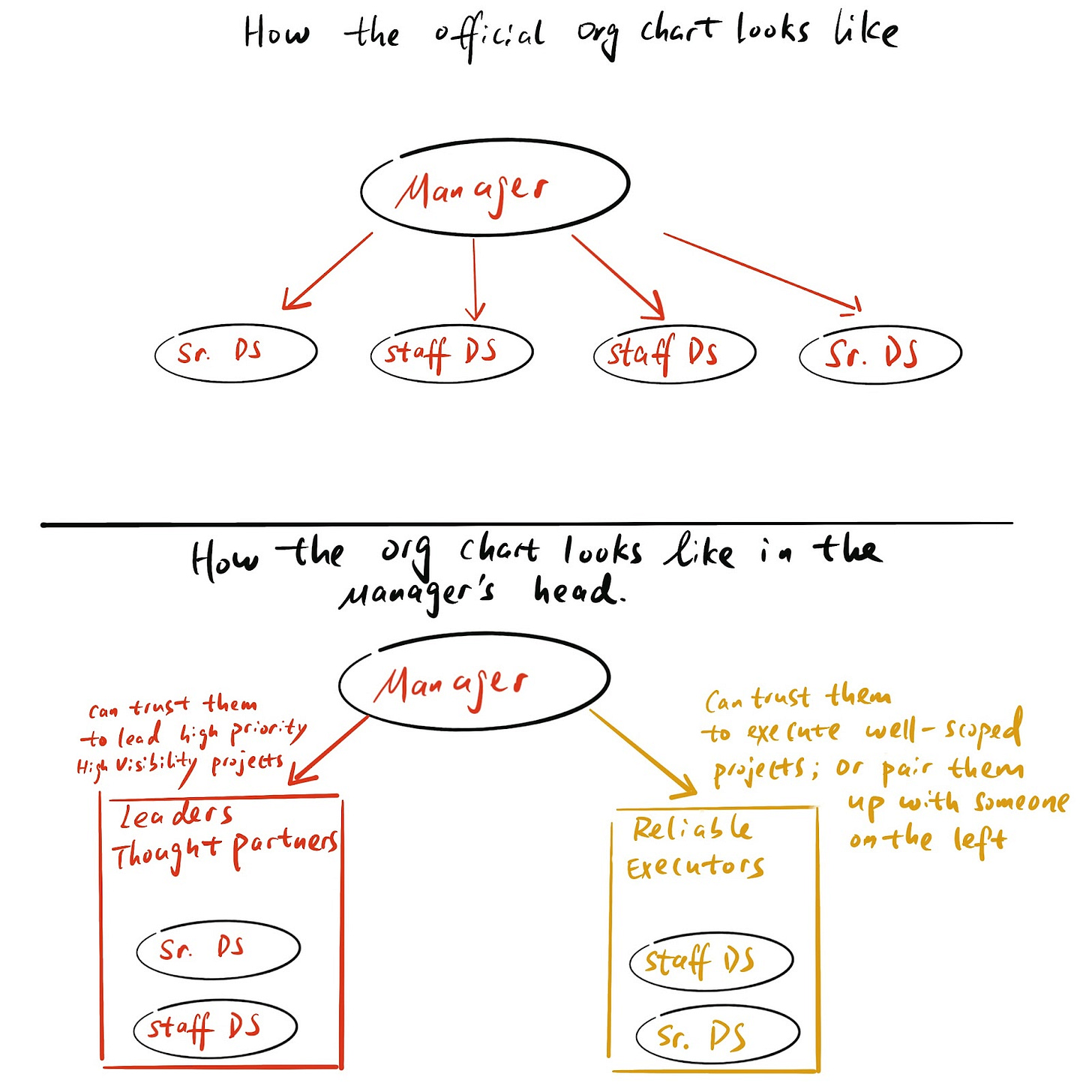

As a manager, I have led teams and coached a lot of bright data scientists (DS) in my career. Part of a manager’s job is to determine, among a team of bright people, who to pull in for what work.

Managers can usually quickly form a “gut feeling” about who they can bring in to lead cross-functional work streams independently versus who can execute but isn’t ready for leadership yet — whether formal (e.g. as a tech lead) or informal (leading a project).

So what are the key distinguishers between these two groups of individuals? After talking to a lot of managers, I’ve noticed there is a consensus:

It’s usually not the ability to do technical work (surprising, I know), but rather certain behavioral/communication patterns that make people perceive you as a lead, take you seriously and pay attention to what you have to say.

In this article, I will break down some of the key things that will make you appear junior, convince you why it’s important to improve on them and teach you how to do exactly that.

Four behaviors that make you seem junior — and what to do instead

Junior trait #1: Providing too much detail/over-explaining

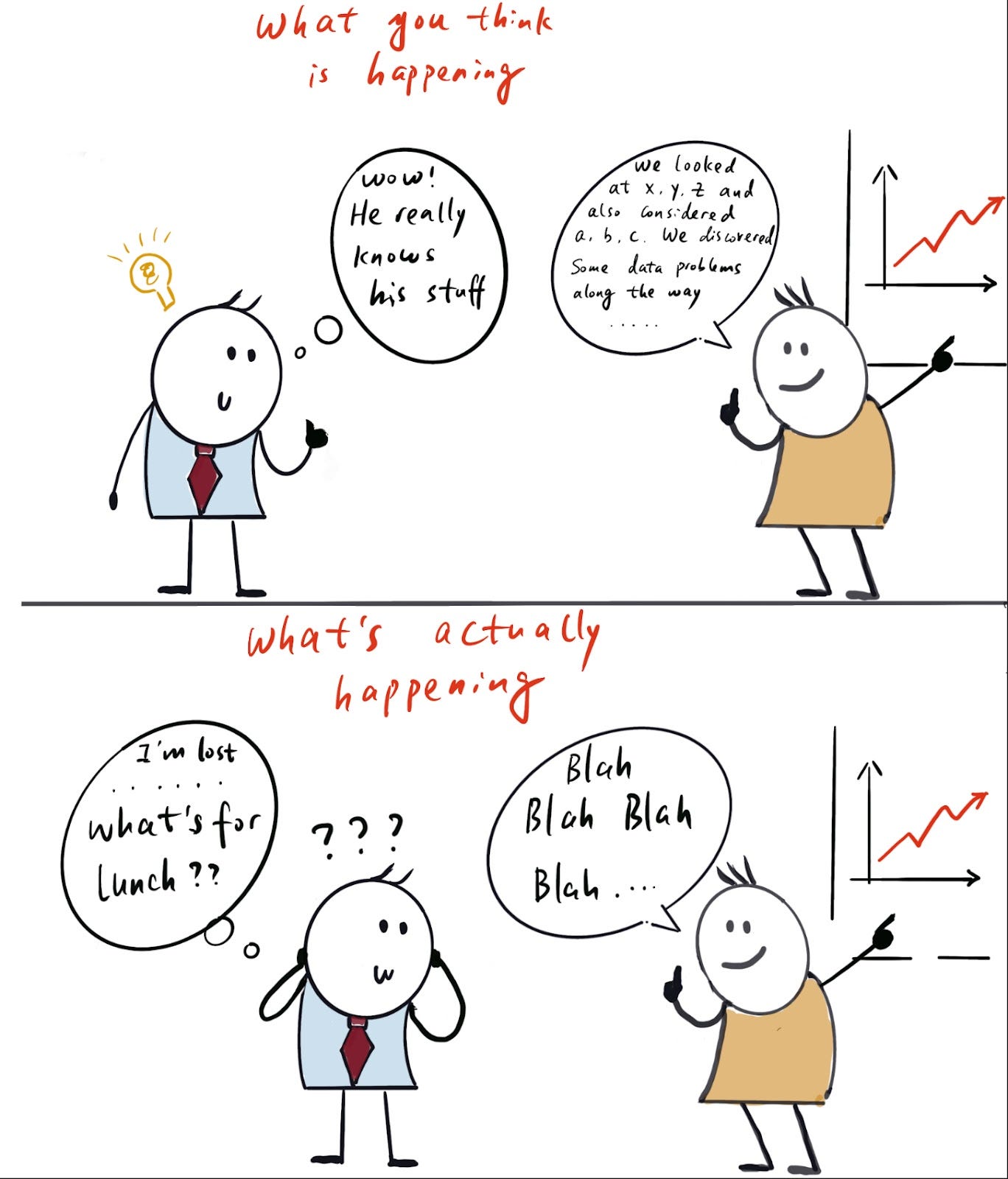

This is innate to analytics folks since the field requires us to pay attention to details. As a result, a lot of junior DS think providing a lot of details will showcase that they have considered a lot of the edge cases and give the audience confidence that they have a lot of knowledge about the field.

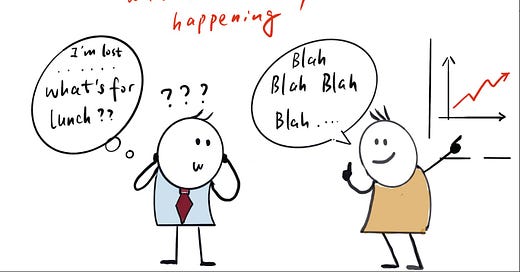

In reality, though, too much detail will often confuse your audience and make you look like you don’t know how to synthesize information. As a result, you will lose people in your communication and cause them to miss your key points since they are buried in all the details (think the VP who starts to scroll on his phone when you get into the nitty gritty of how you pulled the data in SQL).

I have literally had stakeholders tell me “Don’t put this person in front of execs because they will confuse everyone.”

This might sound harsh, but everyone who has ever been in a large corporate meeting knows how inefficient they are even when things are going well. That means as a manager you cannot afford to add additional friction and confusion by having a team member lead the discussion who is not ready.

Don’t get me wrong, it is crucial to consider all the edge cases and pay attention to details during your analysis. But that doesn’t mean all those details and edge cases are worthy of being communicated to others when you present your work (unless they specifically ask about them).

What to do about it

This should be practiced in both written and verbal form.

In written form, try to summarize your work in a TL;DR (a brief blurb highlighting the most important takeaways) that you put at the top of your document or email.

Ideally write your TL;DR using the Pyramid Principle: Start with the conclusion related to the business question you want to answer, and then add key supporting evidence as needed.

Remember, being able to decide what NOT to communicate is as important as deciding what to mention.

For everything you want to communicate, ask yourself if it’s “important enough”. If not, put it in the appendix in case someone asks but remove it from the summary.

For example, imagine that during your analysis you discover a data issue that applies to 0.1% of the member base. Ask yourself: “Does this data error fundamentally change the conclusion of my analysis and/or my recommendation?”

The answer is most likely “No”, so only bring it up when asked.
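
If you want to sanity-check that answer rather than rely on gut feeling, a quick worst-case bound is one option. The sketch below is purely illustrative: the function names, the member counts and the 10% decision threshold are all made up, and it assumes your metric is a simple rate (e.g. the share of members who converted).

```python
from typing import Tuple


def worst_case_bounds(clean_successes: int, clean_total: int,
                      affected_total: int) -> Tuple[float, float]:
    """Bound a rate metric when the affected records cannot be trusted.

    Lower bound: pretend every affected record is a failure.
    Upper bound: pretend every affected record is a success.
    """
    total = clean_total + affected_total
    low = clean_successes / total
    high = (clean_successes + affected_total) / total
    return low, high


def changes_conclusion(low: float, high: float, threshold: float) -> bool:
    """True only if the two bounds fall on different sides of the threshold."""
    return (low >= threshold) != (high >= threshold)


# Hypothetical numbers: 100,000 members, 100 of them (0.1%) hit by the data issue.
low, high = worst_case_bounds(clean_successes=12_000,
                              clean_total=99_900,
                              affected_total=100)

# Hypothetical decision rule: recommend the launch if the rate is at least 10%.
needs_mention = changes_conclusion(low, high, threshold=0.10)
print(f"rate is between {low:.3f} and {high:.3f}; changes conclusion: {needs_mention}")
```

If even the worst case lands on the same side of your decision threshold, the issue belongs in the appendix, not in the summary.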

In verbal form, practice talking about your project with peers who are not deep in the weeds of the work with you to see if your summary confuses them. And when crafting this verbal summary in your head, imagine you are doing an “elevator pitch” to the CEO: in less than 30 seconds, give them a description of your work that makes them deeply understand the “why” and gives just enough context on the “what” so that they can form an opinion.

Junior trait #2: Not having an opinion or recommendation

When asked “Based on your analysis, what would be your recommendation?”, one of my former team members loved using the phrase “I have no dog in this fight”. This might not seem like a big deal, but it’s actually an example of one of the most common career-limiting traits of data scientists.

The more senior you become as a data scientist (and the more AI replaces the “execution” part of the job), the more important it becomes to translate your analysis into a recommendation for your stakeholders.

But it’s not surprising that junior DS struggle with this part. Forming an opinion or recommendation takes more than just pulling the data; it requires digesting and understanding the data and connecting it to the needs of the business. And let’s face it, when you’re deep in the weeds of a 500-line query or a complex data pipeline, it’s easy to lose sight of the bigger picture. So a lot of people do a great job executing the data pull and then leave it to their audience to make sense of it.

The other reason a lot of data scientists or analysts are hesitant to give a clear recommendation is the perceived risk involved. Hedging is deeply wired into our risk-averse analytical brains.

This makes sense, since data is nuanced and nothing is ever completely black-and-white. But this can backfire when you’re working in a fast-moving environment like a startup and you become a blocker because you’re drowning your audience, who are trying to make a decision, in caveats.

Every decision comes with risks, and every recommendation has caveats. But putting too much emphasis on them results in analysis paralysis — gathering more and more data and doing more and more analysis, hoping the decision will make itself clear in the process.

Ultimately, not giving recommendations shows a lack of ownership of the problem and lack of the ability or willingness to make sense of the data.

And you’ll be limited to simple “execution” work as a result.

What to do about it

First and foremost, adopt an ownership mindset.

Most of us are able to make decisions and have strong opinions when it comes to things we care about. For example, you were able to decide where to travel last time you planned a trip, right?

You probably gathered information about weather, flight tickets, reviews etc. to decide between a couple of options. And despite all that complex information, you landed on a clear decision in the end. Why would business decisions be different?

Imagine that you’re the decision-maker and have to make this call:

What data would you need?

And once you pull those data points, ask yourself: Would you be convinced by the data presented?

If not, what else can possibly convince you?

Just don’t fall into the trap of thinking that gathering more and more data is the solution when you’re stuck. In a lot of cases, what we actually need is to digest and make sense of the data we already have. 1,000 different data cuts will only confuse people and take them further away from reaching a decision since each cut is telling a slightly different story and it’s difficult to make sense of them all.

And if the fear of risk is what’s stopping you from having a recommendation, remember this: The only way to generate above-average returns in financial markets is by taking risks; risk-free strategies only yield the low risk-free rate of return. The same applies at work; if the decision were crystal clear with no uncertainty or risk involved, nobody would need you to make a recommendation. The value you add comes from giving robust recommendations despite nuance and ambiguity.

Don’t get me wrong, you should definitely list out the caveats of your recommendation. But trust me: Most people prefer that you have a recommendation that they don’t agree with instead of having no recommendation at all. Because if you do have a recommendation and list out how you arrived at it, they can dig in to see which of your assumptions and decisions they agree with and which ones they don’t.

At the end of the day, data teams are not paid to only pull and present data; we are paid to help drive business decisions with data.

So if you always leave the mental burden of digesting data and making the decision to your audience or others on your team, you limit yourself to being a junior analyst instead of a true thought partner.

Junior trait #3: Not being clear about the “why” behind the analysis

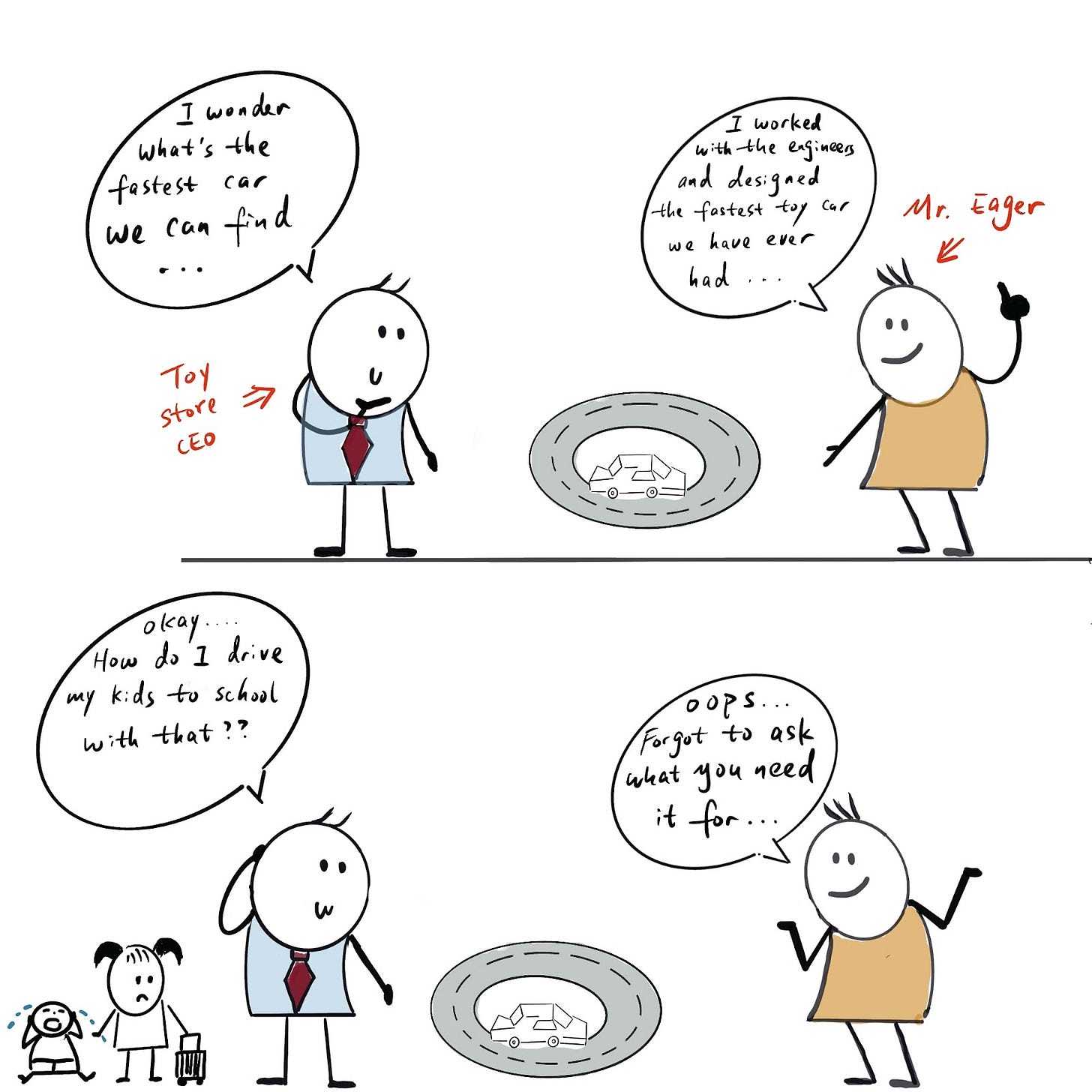

I can’t count how many times — after I talked to my team about an analysis — I felt like we were solving the wrong problem.

When I ask the individual “Why are we doing this analysis? What’s the business decision behind this that we are trying to drive?”, the answer I usually get from junior DS is “XYZ stakeholder asked for this”.

That should never be the only reason you are working on an analysis.

The interesting thing is, one of the most common complaints I hear from data scientists is being treated as a “data puller”; but when involved in an analysis, a lot of junior analysts voluntarily demote themselves to “data pullers”.

They are so laser-focused on finding out what data they need or what data cuts they should produce that they leave the big picture questions like “What problem are we trying to solve?” and “Why is it important to solve this problem?” to their managers or tech leads to figure out.

Whenever this happens, it serves as a signal to me, the manager, that this person is not ready to run projects independently because I need to go back to the stakeholder to inquire about the motivation of the analysis.

So why is this so problematic, especially if you want to grow into a lead role?

It goes back to the ownership mindset. Not knowing the “why” behind the analysis means you are not taking ownership of the business problem. More importantly, not being clear about the why means not being clear about the problem space, which severely hinders your ability to deliver the most effective solutions.

People who don’t have a deep understanding of the problem space often conduct analyses that don't answer the exact question stakeholders have in mind. These stakeholders will then ask for additional data, hoping that it will help them answer the original question. As a result, the analyst gets frustrated because the “data requests” constantly change, and a vicious cycle starts.

What to do about it

Similar to my suggestion in the last section — start by owning the problem. If your stakeholder tells you “I’m trying to understand how many people used our search function in the past month”, instead of directly jumping into pulling the number, you should try to find out why they need that data. What decision are they trying to make?

Once you understand the ultimate problem your business partners have in mind, you can help them brainstorm the best data that can be used to solve that problem (and trust me, in a lot of cases it’s not the original data they asked you to pull).

This way, you are truly elevating yourself to be a thought partner and owner of the problem, not just somebody who executes data pulls. If you find yourself struggling with this, The Operator’s Handbook has an in-depth guide on how to deeply understand a problem space and find the most effective solution from there.

Junior trait #4: Not having the basics down to be able to stay “one step ahead”

Imagine an economist is giving an interview about their opinion on the tariffs, but stumbles when asked “Roughly what % of American goods are imported?”. Would you trust anything they say afterwards?

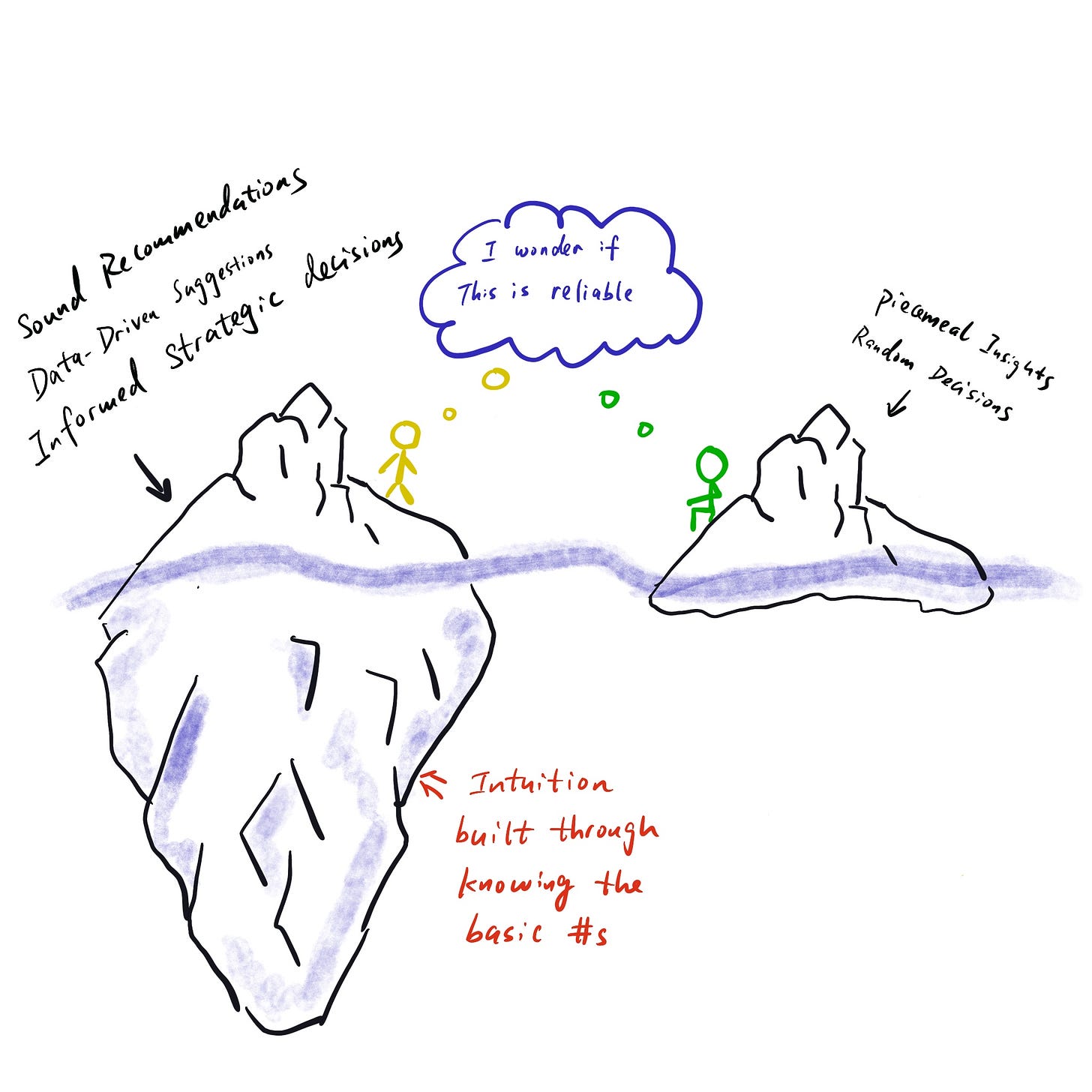

Probably not. Similarly, the easiest way to lose credibility as a data scientist is when people feel like you don’t really know the data you’re working with or the area of the business you’re covering. For example, if you’re doing a deep-dive analysis into user behavior on your company’s platform, you should know off the top of your head roughly how many active users the platform has.

If you want to establish yourself as an expert in an area, you need to stay “one step ahead” of your stakeholders. If you are the POC for a topic, you are expected to be the most familiar with the data (even compared to your manager) and have an answer ready for the most common questions people might have.

Don’t fret, I’m not saying you need to be able to anticipate every single “follow up” question that people may have. But imagine you’re presenting an analysis and share the fact that more than 80% of your product’s users are based in the US.

Most of your audience will naturally wonder things like:

“What are the other major countries besides the US?”

“Does the US also account for 80% of DAUs and revenue, or a larger or smaller share?”

If you don’t have an answer for any of the “natural follow-up questions”, it will make people wonder whether you really spent enough time to understand and explore the data.
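
One way to prepare for these follow-ups is to compute the same share across a few metrics before you present. Here is a minimal sketch in Python using made-up numbers; the country codes, metric names, totals and helper functions are all hypothetical and only meant to show the idea.

```python
from typing import Dict, List

# Hypothetical per-country totals; every number here is invented for illustration.
metrics: Dict[str, Dict[str, int]] = {
    "users":   {"US": 820, "IN": 70, "BR": 50, "DE": 40, "other": 20},
    "dau":     {"US": 300, "IN": 50, "BR": 30, "DE": 15, "other": 5},
    "revenue": {"US": 900, "IN": 20, "BR": 30, "DE": 45, "other": 5},
}


def share(by_country: Dict[str, int], country: str) -> float:
    """Fraction of the metric's total that comes from one country."""
    return by_country[country] / sum(by_country.values())


def top_countries(by_country: Dict[str, int], exclude: List[str], n: int = 3) -> List[str]:
    """Largest countries by value, skipping the ones listed in `exclude`."""
    remaining = {c: v for c, v in by_country.items() if c not in exclude}
    return sorted(remaining, key=remaining.get, reverse=True)[:n]


# Follow-up 1: which other countries matter besides the US?
others = top_countries(metrics["users"], exclude=["US", "other"])

# Follow-up 2: is the US share similar for DAUs and revenue?
us_share = {name: share(values, "US") for name, values in metrics.items()}

print("Top non-US countries by users:", others)
for name, value in us_share.items():
    print(f"US share of {name}: {value:.0%}")
```

Having these few numbers written down before the meeting is usually enough; you don’t need to present them unless someone asks.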

What to do about it

Try to be curious about the data you’re working with. Start with a basic question, and then explore the data from there.

For example, imagine you work at Facebook and are analyzing marketplace data. The first thing you check is how big the product is and you find that “10% of all Facebook DAUs use FB Marketplace”.

What natural follow-up questions could you explore from here?

For example, you might want to check how this number has changed over time, and how that 10% compares to other product surfaces. Let your curiosity carry you a little bit and jot down the answers to these questions. The key is to deeply understand the context of the data you plan to present without wandering too far from the key question you’re trying to answer for the business. It’s a tricky balance, I know.

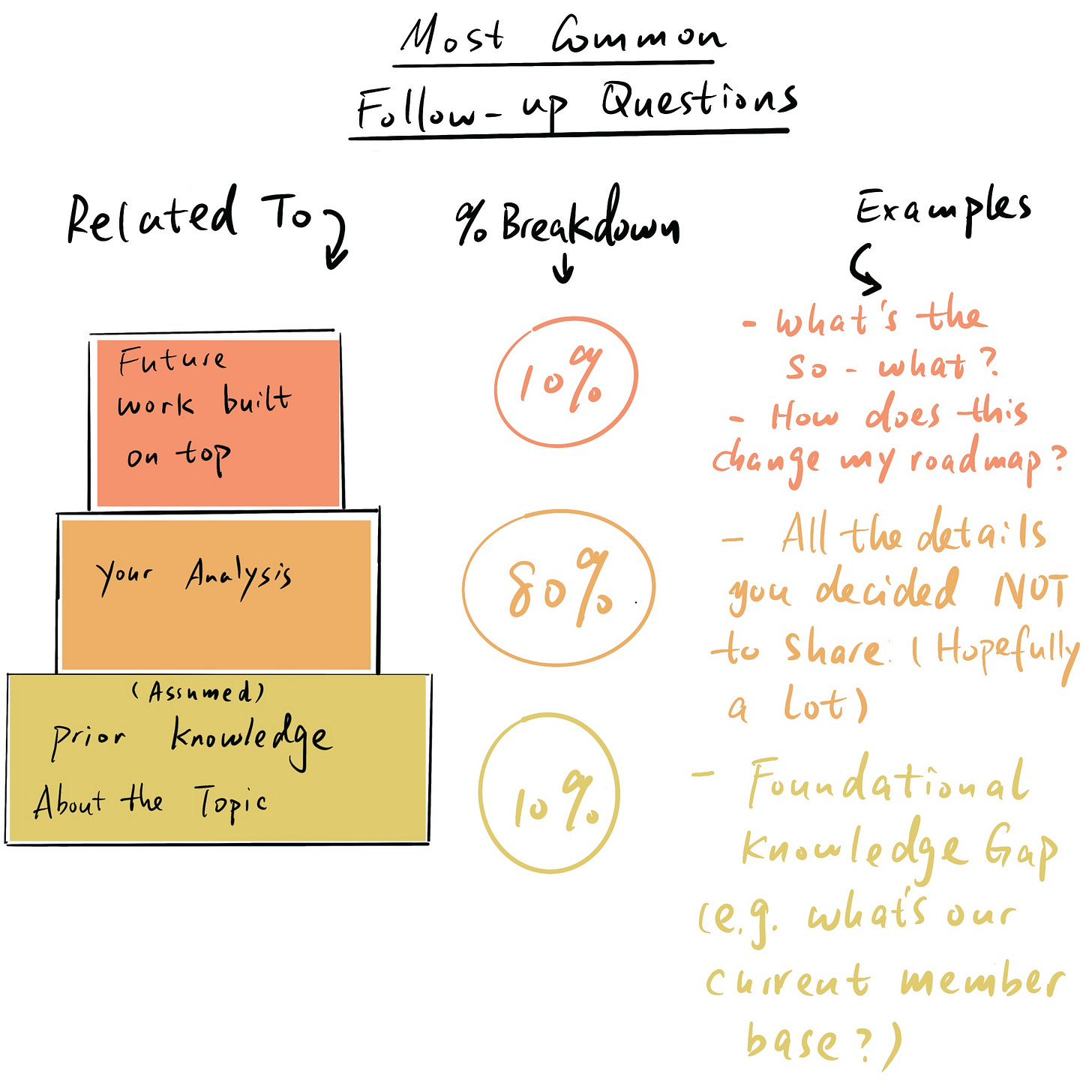

Generally, you can expect follow-up questions from stakeholders to fall into three buckets:

Foundational knowledge: Different stakeholders will have different levels of pre-existing knowledge on the topic you’re presenting. Some of them might even have very basic questions (e.g. how the product works, what steps the onboarding flow has, how many users there are etc.)

Your analysis: You should always share only the most important insights from your analysis rather than dumping everything you did on your stakeholders. However, stakeholders might ask for more detail on certain aspects, and you should be prepared to elaborate when needed.

Next steps: Stakeholders will often be curious about what your analysis means for them. What are the recommended next steps? What changes should they make based on your findings?

Think through these three areas before you present an analysis, and you’ll be able to anticipate many of the questions you’ll get.

Lastly: Get a second pair of eyes on your work (or use ChatGPT) before you present to a broader audience. Ideally, this person is someone who’s not as deep in the weeds of the analysis as you are; this will help you find any obvious things you missed because you were too close to the data.

Conclusion

A lot of the “symptoms” mentioned in this article represent a common theme — lacking an ownership mindset. What establishes you as a lead in an area is not the title you have or how long you have been working in that area; it’s the mindset you adopt and how you take responsibility for owning and solving a problem.

So if you want to be perceived as a trusted thought partner instead of a junior IC who can only help pull data, put yourself in the shoes of the decision makers and treat the decisions with the same level of rigor as the ones you care deeply about.
